What Americans really think about A.I.

The Trump administration's AI Action Plan received more than 10,000 comments. We analyzed all of them to find out how Americans feel about artificial intelligence and its impact on jobs, human rights, competition with China and more.

ANALYSIS By Tekendra Parmar | Contributing Editor

On April 24, the White House released 10,066 public comments submitted in response to its AI Action Plan. At Compiler, we analyzed the full dataset using machine learning and sentiment analysis tools to understand who submitted feedback, what they said and how they felt.

What we found was not a tech-lash, but a textured debate: Individuals far outnumbered companies in the response pool, and their concerns centered on copyright, labor, human rights and data governance. While there was plenty of skepticism, especially around how artificial intelligence is trained and deployed, the overall emotional tone was surprisingly balanced—and in some cases, cautiously optimistic.

While the administration has made clear that it intends to chart a path that emphasizes innovation over regulation, the sheer volume of public feedback shows that its AI policy will be scrutinized not just by companies, lobbyists and frontier labs, but also by everyday Americans.

Who commented on the plan

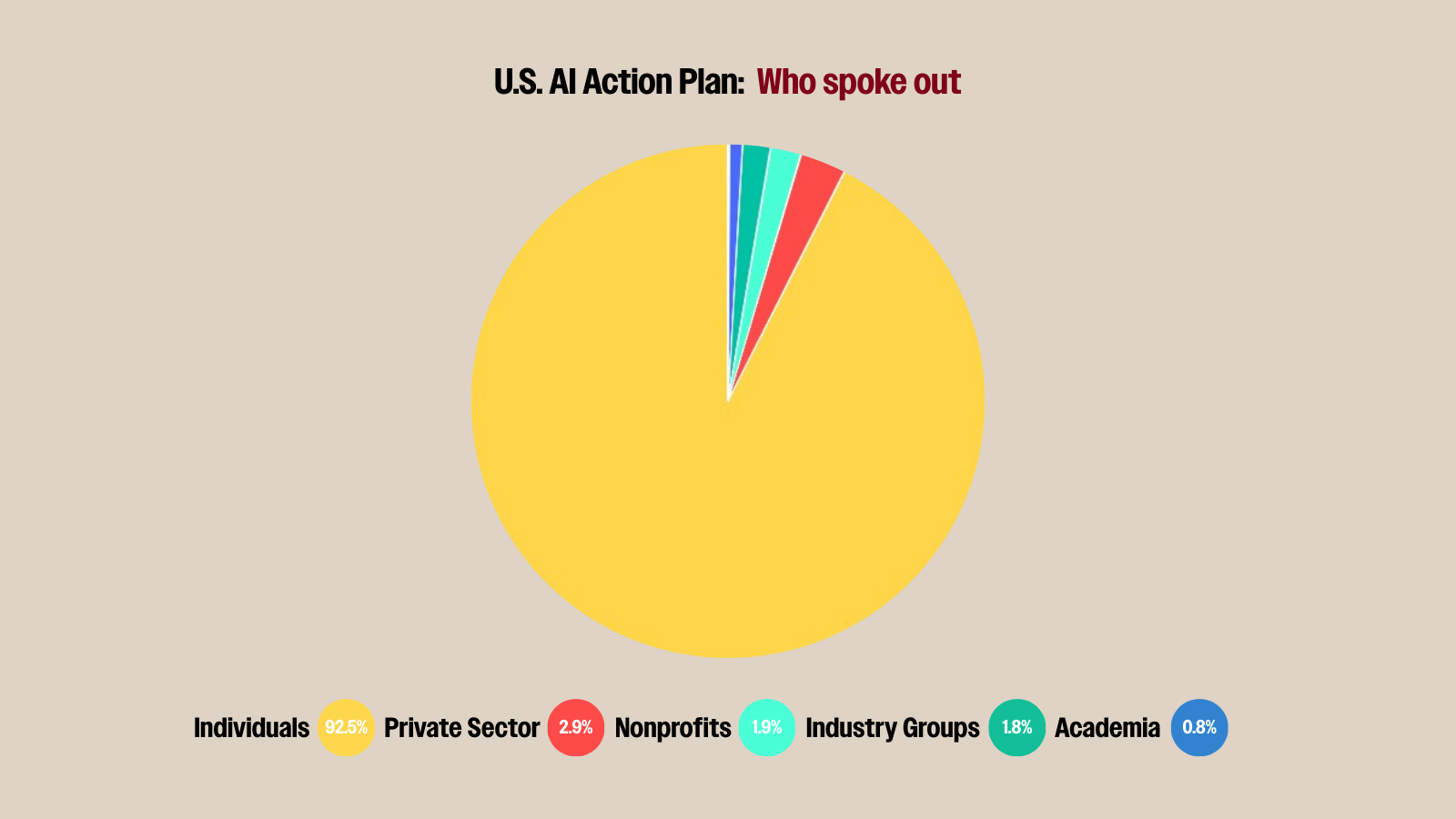

Our analysis found that the overwhelming majority of responses, more than 90%, came from individuals writing on behalf of themselves rather than a company or interest group. Private sector respondents made up the second-largest group, at about 3% of respondents, followed by nonprofits at about 2%.

What we’re seeing in the dataset seems to be a population that is coming to terms with an ever-encroaching technology and is deeply engaged in the conversation around how it should be administered. While there are diverging views on how to regulate AI, even within similar groups, it's clear that Americans are paying attention and are more aware of how AI may benefit or harm their lives.

What are people talking about?

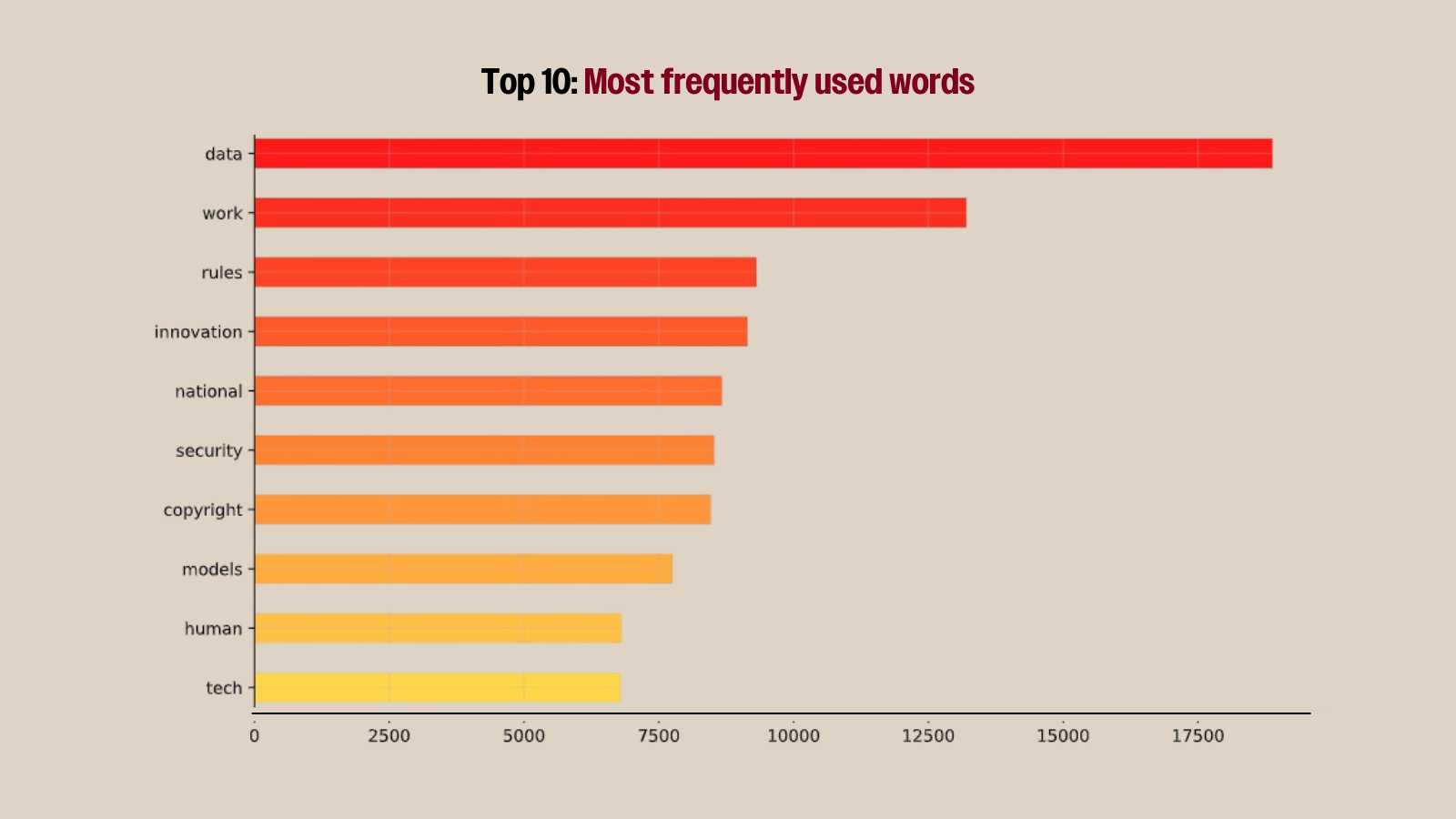

To understand what Americans were actually talking about, Compiler ran the full dataset through an open-source machine-learning model that surfaces big-picture themes in large texts. A quick analysis of the words that appeared the most in the text? “Data,” “work,” “rules,” and “innovation.”
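The article does not publish the code behind this word count, so the following is only a minimal sketch of how such a tally could be done. The stopword list and the sample comments are made up for illustration, and `top_words` is a hypothetical helper name, not Compiler's tooling.

```python
import re
from collections import Counter

# Hypothetical, deliberately short stopword list (an assumption for this sketch).
STOPWORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "is", "are", "on",
    "for", "that", "it", "be", "we", "our", "not", "should", "with", "by",
}

def top_words(comments, n=4):
    """Return the n most frequent non-stopword tokens across all comments."""
    counts = Counter()
    for text in comments:
        # Lowercase the text and keep only runs of letters and apostrophes.
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(t for t in tokens if t not in STOPWORDS)
    return counts.most_common(n)

# Made-up sample comments, not taken from the dataset.
sample = [
    "Protect our data and our work.",
    "Clear rules on data should not slow innovation.",
    "My work is used as training data.",
]

print(top_words(sample, n=2))  # [('data', 3), ('work', 2)]
```

A real run over 10,066 comments would need a fuller stopword list and probably some stemming, so that "rule" and "rules" are counted together.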

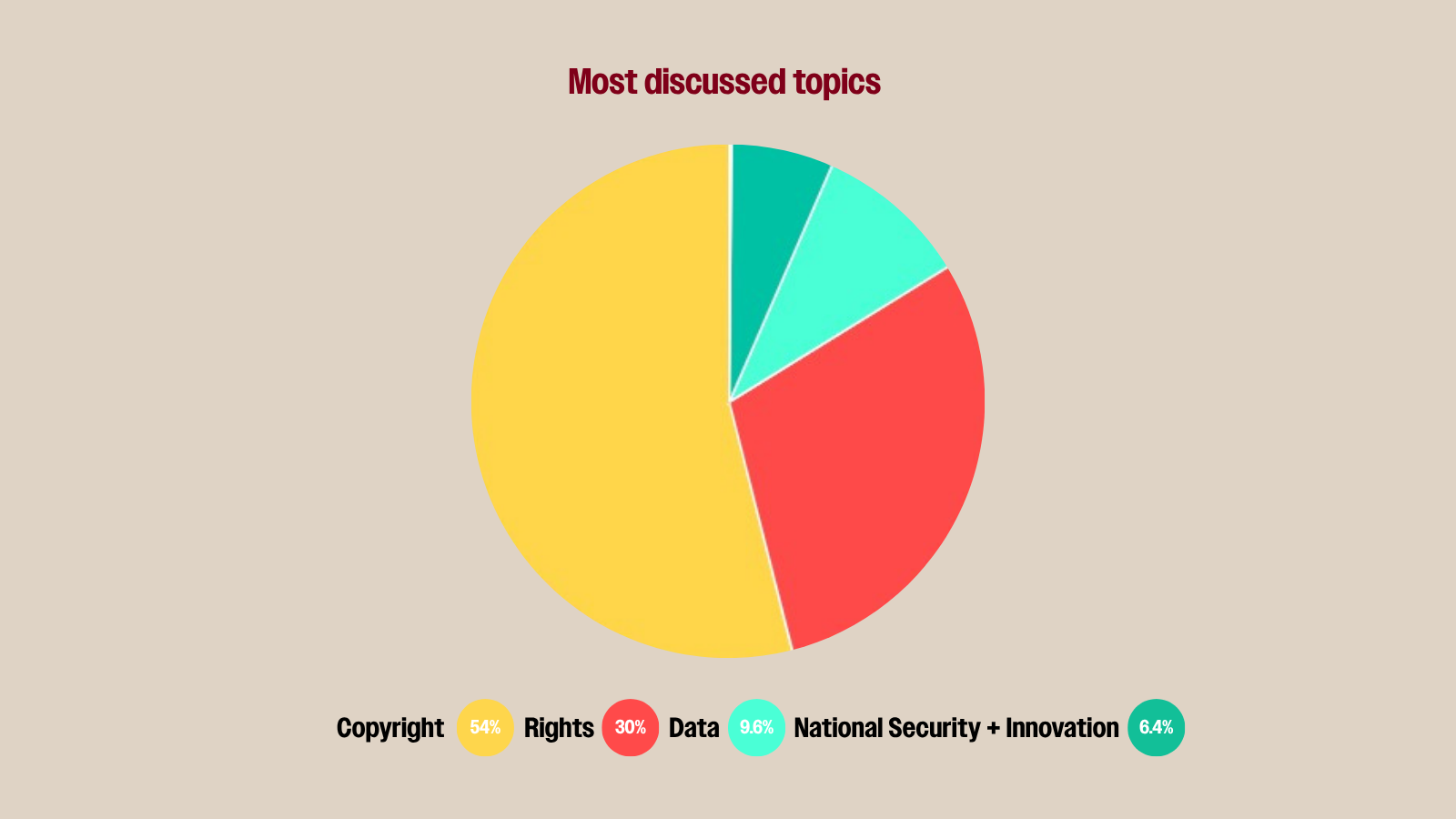

The same model helped decipher the most common themes present in the feedback. We found that more than 50% of respondents were concerned with copyright issues. These individuals discussed topics such as the data and labor used to train large language models, whether that labor came from artists, programmers or writers.

Even on issues such as copyright, individual respondents can be quite nuanced in their views on regulation. Some were concerned about how the U.S. Copyright Office determines human authorship for images partially edited or generated using artificial intelligence. Many were concerned with how frontier AI labs use creator content to train models.

But even influential organizations are split on how to fairly compensate creators whose work has been used to train models. OpenAI is lobbying to expand the definition of fair use, while record industry groups advocate for licensing deals. Meanwhile, some nonprofits argue that licensing deals won't go far enough to compensate creators for their work.

Copyright was followed by human rights, with another 30% of respondents preoccupied with how AI would affect them. Behind that topic, a cohort of individuals appeared predominantly concerned with AI’s use of data. While they discussed copyright issues, these respondents appeared more focused on ancillary data issues ranging from data centers to national security.

How do people feel about AI?

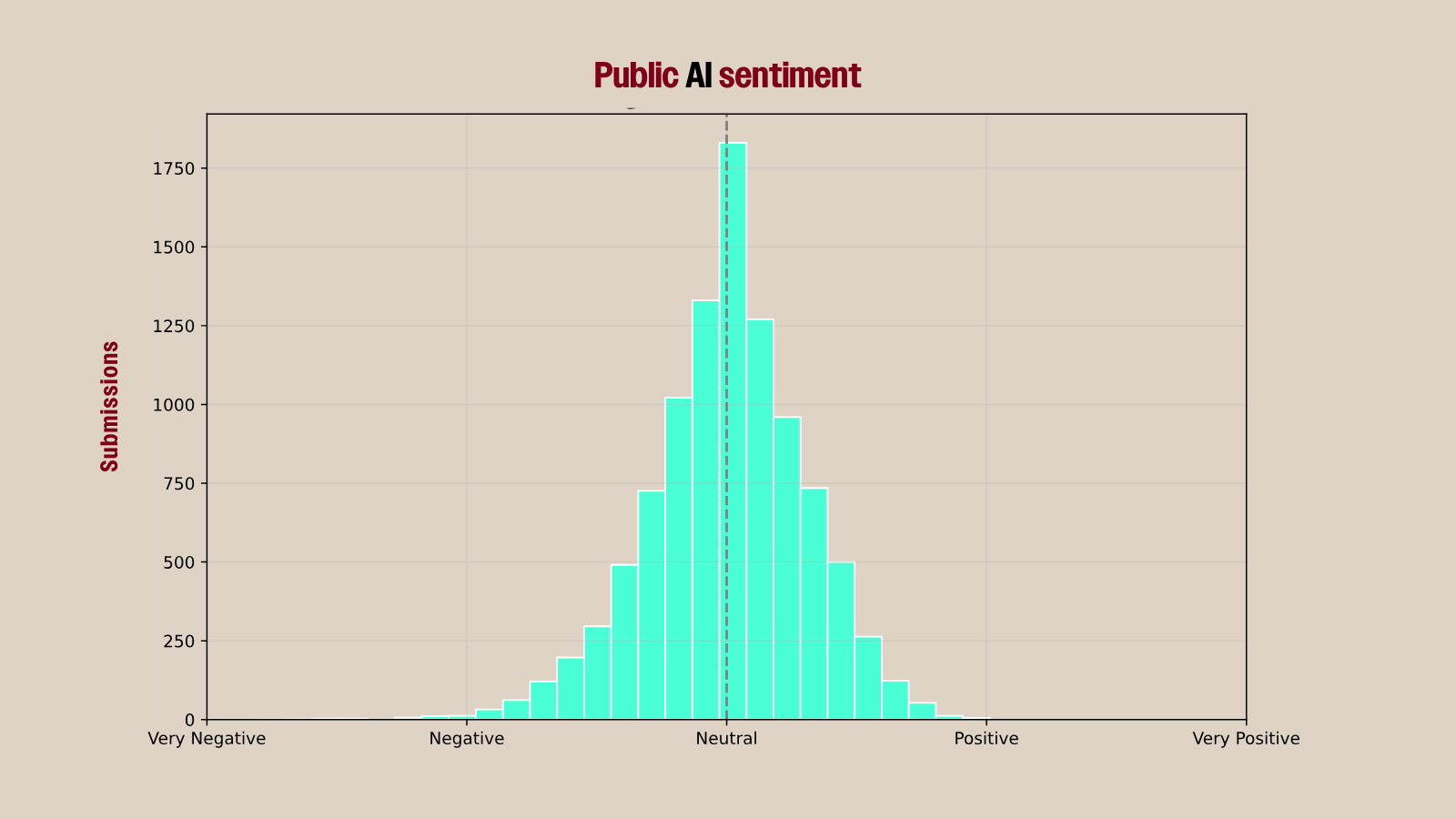

The collective feedback on the AI Action Plan is one of the largest public datasets on AI, and an opportunity to take the emotional temperature of the country when it comes to this rapidly emerging technology. Compiler ran each comment through a sentiment analysis tool that scores text across eight core emotions (anger, fear, anticipation, trust, surprise, sadness, joy and disgust), along with a rating for positive or negative sentiment. The result offered a rare snapshot of how Americans are feeling about AI in their own words.
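The article does not name the tool it used. One common way to score text across emotion categories like these is a word lexicon that maps each word to the categories it evokes. The sketch below assumes that approach; `LEXICON` is a tiny hand-made stand-in rather than a real published lexicon, and `score_comment` is a hypothetical helper.

```python
import re
from collections import Counter

# Tiny hypothetical lexicon: word -> categories it counts toward.
# A real lexicon would cover thousands of words.
LEXICON = {
    "steal": {"anger", "disgust", "negative"},
    "threat": {"fear", "anger", "negative"},
    "hope": {"anticipation", "joy", "trust", "positive"},
    "protect": {"trust", "positive"},
    "worried": {"fear", "sadness", "negative"},
}

def score_comment(text):
    """Count how many lexicon hits each category gets in one comment."""
    scores = Counter()
    for token in re.findall(r"[a-z']+", text.lower()):
        # Words missing from the lexicon contribute nothing.
        for category in LEXICON.get(token, ()):
            scores[category] += 1
    return scores

# Made-up comment, not taken from the dataset.
scores = score_comment(
    "I am worried AI companies steal art, but I hope rules protect us."
)
print(scores["positive"], scores["negative"], scores["trust"])  # 2 2 2
```

A single comment can register several emotions at once, which is how a mixed or "cautiously optimistic" comment would show up in this kind of scoring.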

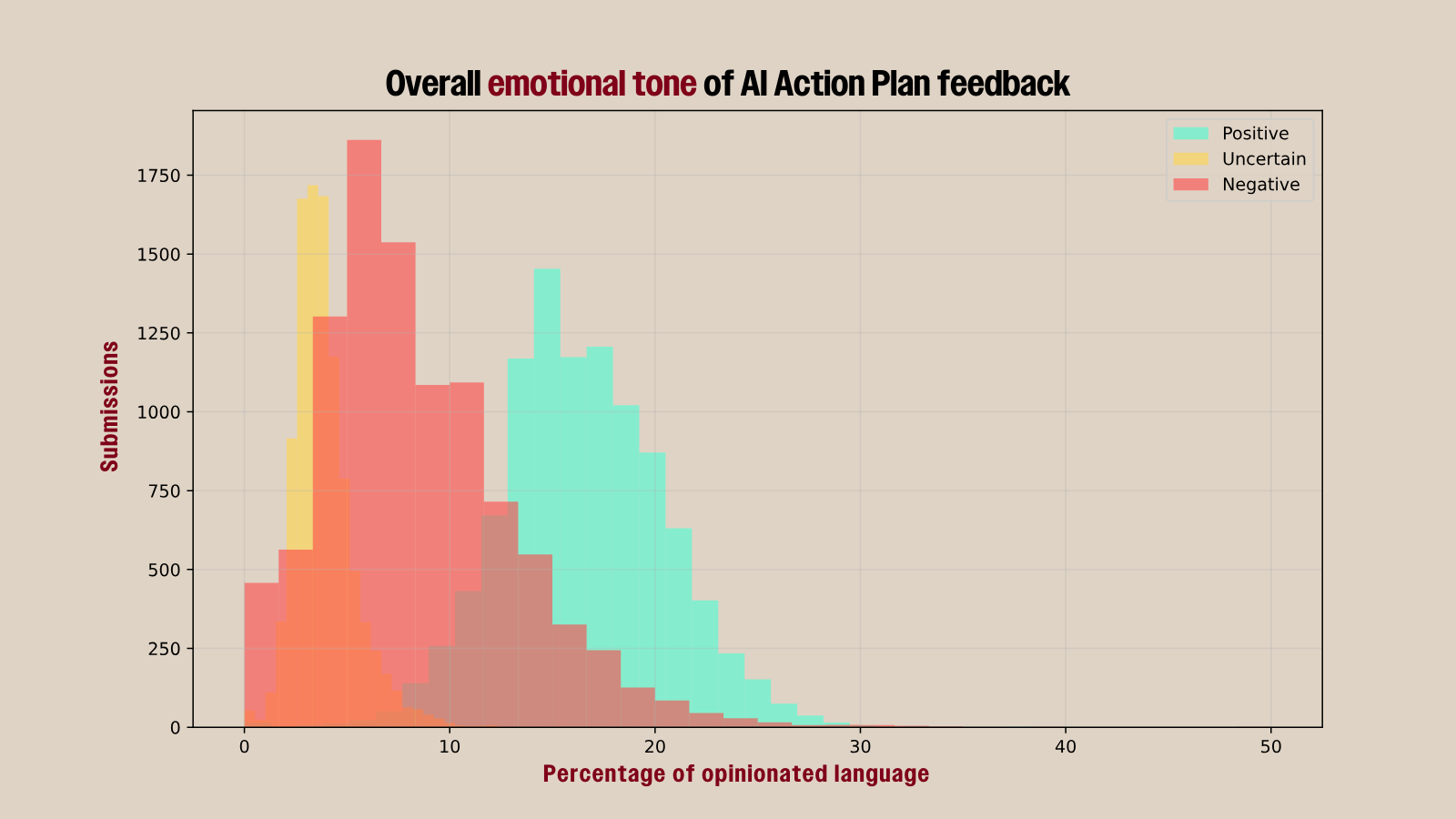

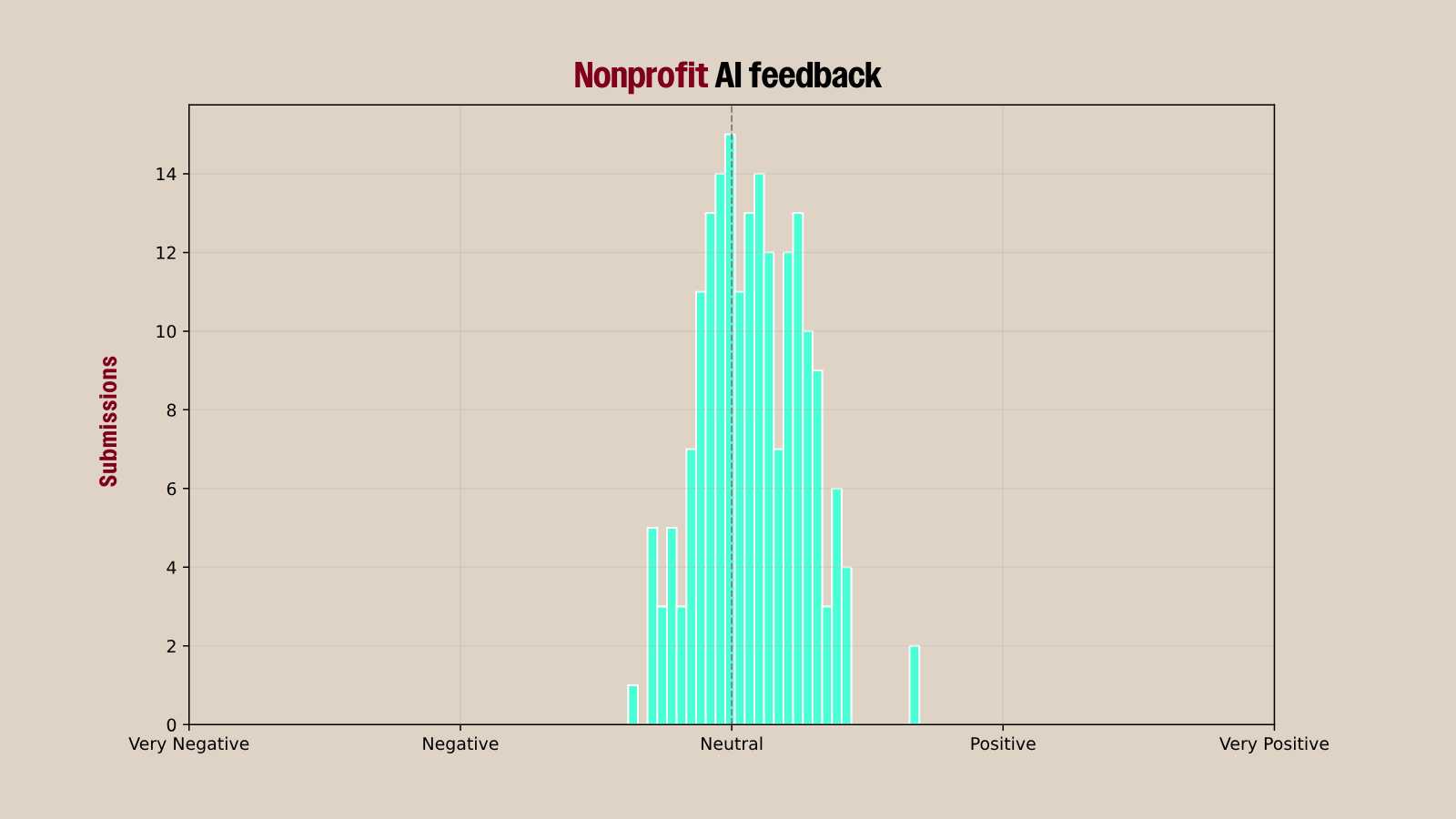

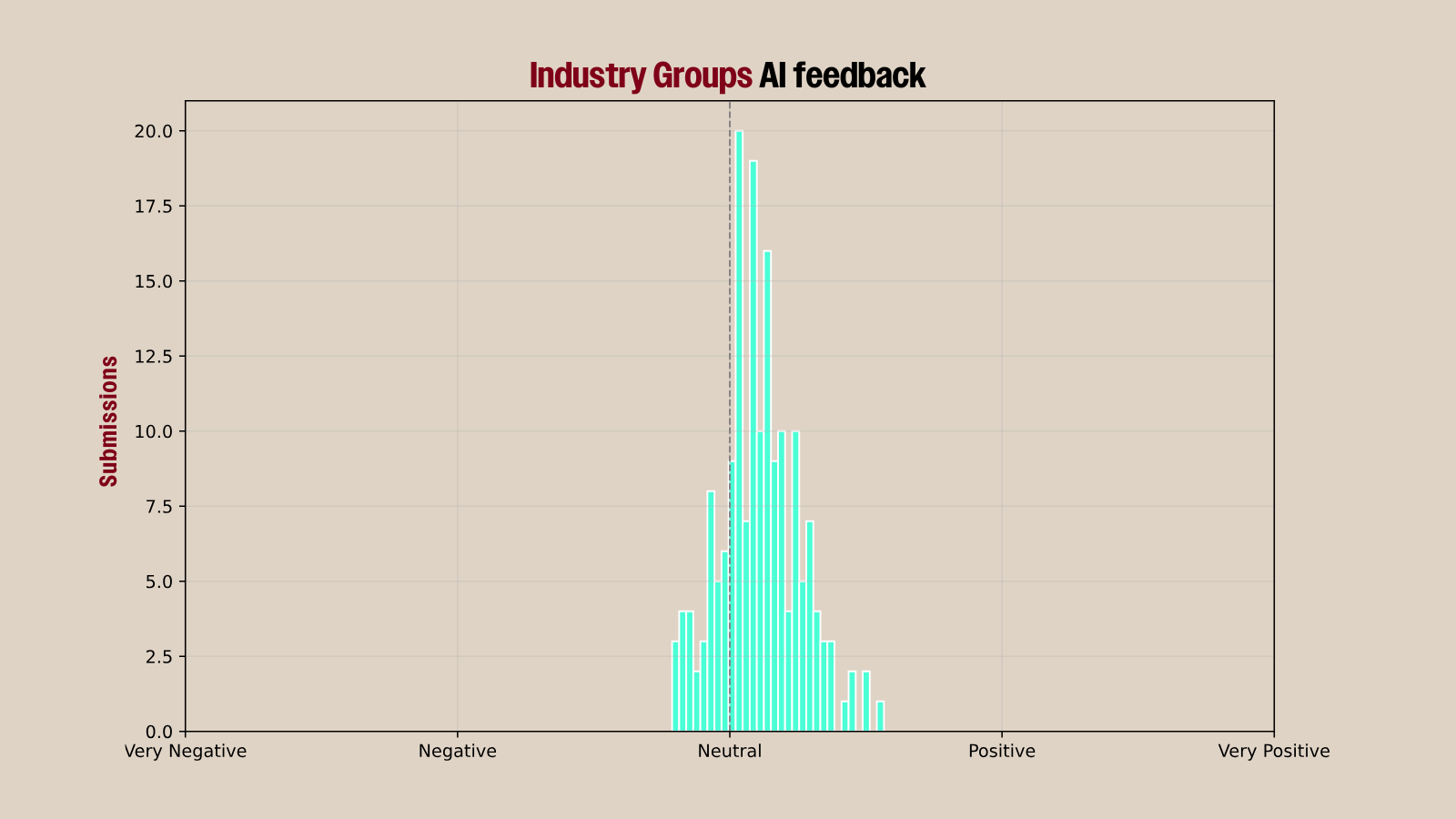

While more comments carried some level of uncertainty or negativity, those comments were not pervasively negative, and the comments that expressed positive emotions about AI tended to do so more strongly. When we normalized the data, public sentiment split quite evenly: The number of submissions expressing positive views on AI was roughly equal to the number expressing negative views.
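The article does not say how the data was normalized. One plausible reading, sketched below purely as an assumption, is to divide each comment's positive and negative word hits by its length so that long comments do not dominate, then label each comment by its net score. All numbers here are made up, and `net_sentiment` and `label` are hypothetical helpers.

```python
def net_sentiment(positive_hits, negative_hits, word_count):
    """Length-normalized net sentiment: (positive - negative) hits per word."""
    if word_count == 0:
        return 0.0
    return (positive_hits - negative_hits) / word_count

def label(score):
    """Label a comment by the sign of its normalized score."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

# Made-up (positive_hits, negative_hits, word_count) triples.
comments = [(2, 6, 400), (5, 1, 50), (1, 3, 100), (4, 0, 40)]

normalized = [net_sentiment(*c) for c in comments]
labels = [label(s) for s in normalized]

print(labels)  # ['negative', 'positive', 'negative', 'positive']
```

In this toy data the two negative comments are long and only mildly negative per word, while the two positive ones are short and strongly positive, which mirrors the pattern the paragraph above describes.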

This tracks with recent surveys on how Americans perceive AI. Two years ago, a majority of Americans said that they were more concerned about AI than excited, according to Pew Research. Last year, a majority of American respondents to a Gallup survey said AI would do an equal amount of harm and good.

Of course, to write a public comment to the government you’ve got to be pretty publicly engaged to begin with, so the dataset is self-selecting. But it’s still a huge indication of public engagement that about 9,000 individuals wrote to the government about its AI policy and that it wasn’t all doom and gloom.

Interestingly, this also tracks with how Europeans view AI. In a recent study, 62% of Europeans surveyed said they believed AI will positively impact the economy, but 66% also thought that more jobs will be destroyed than created.

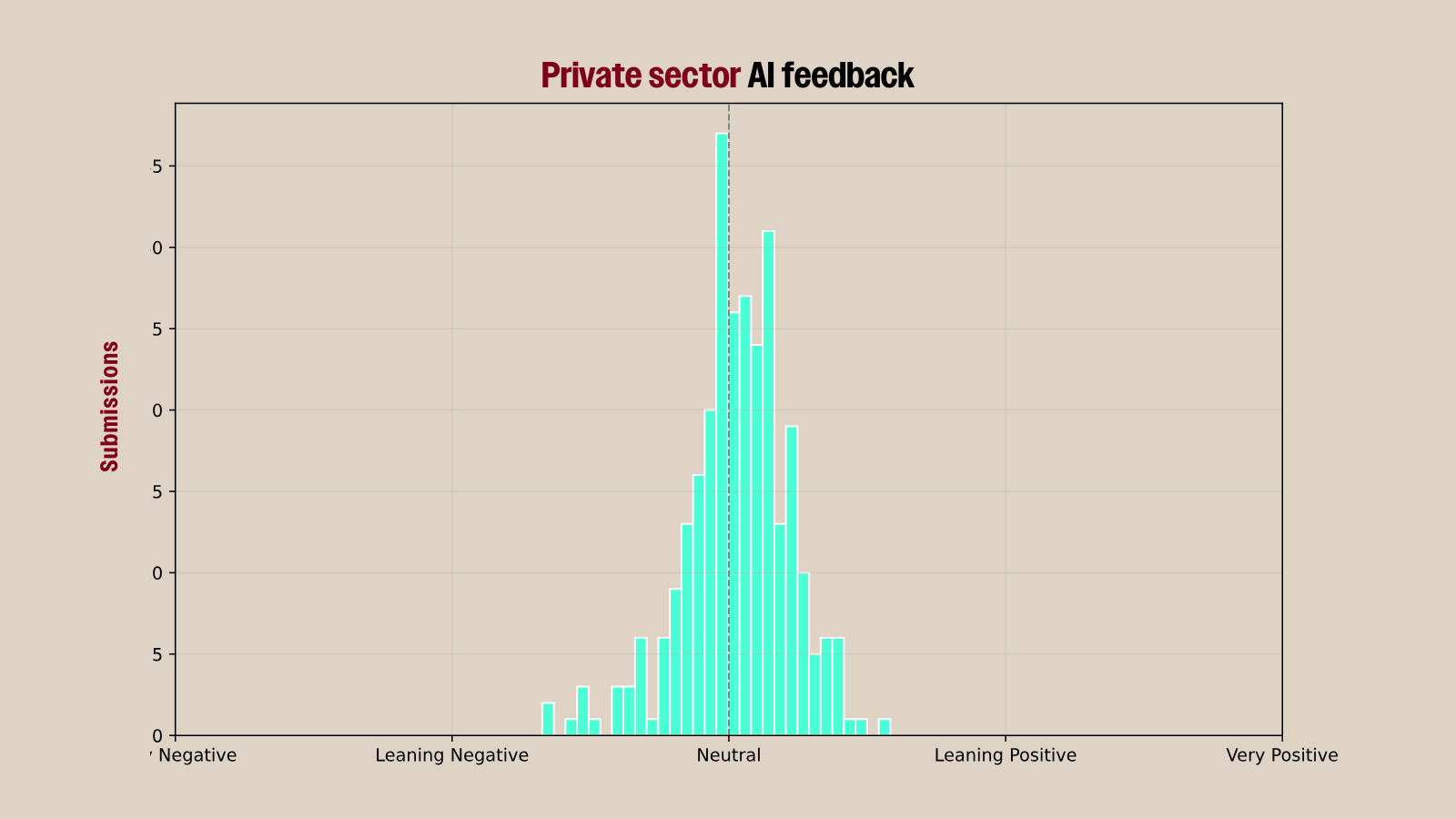

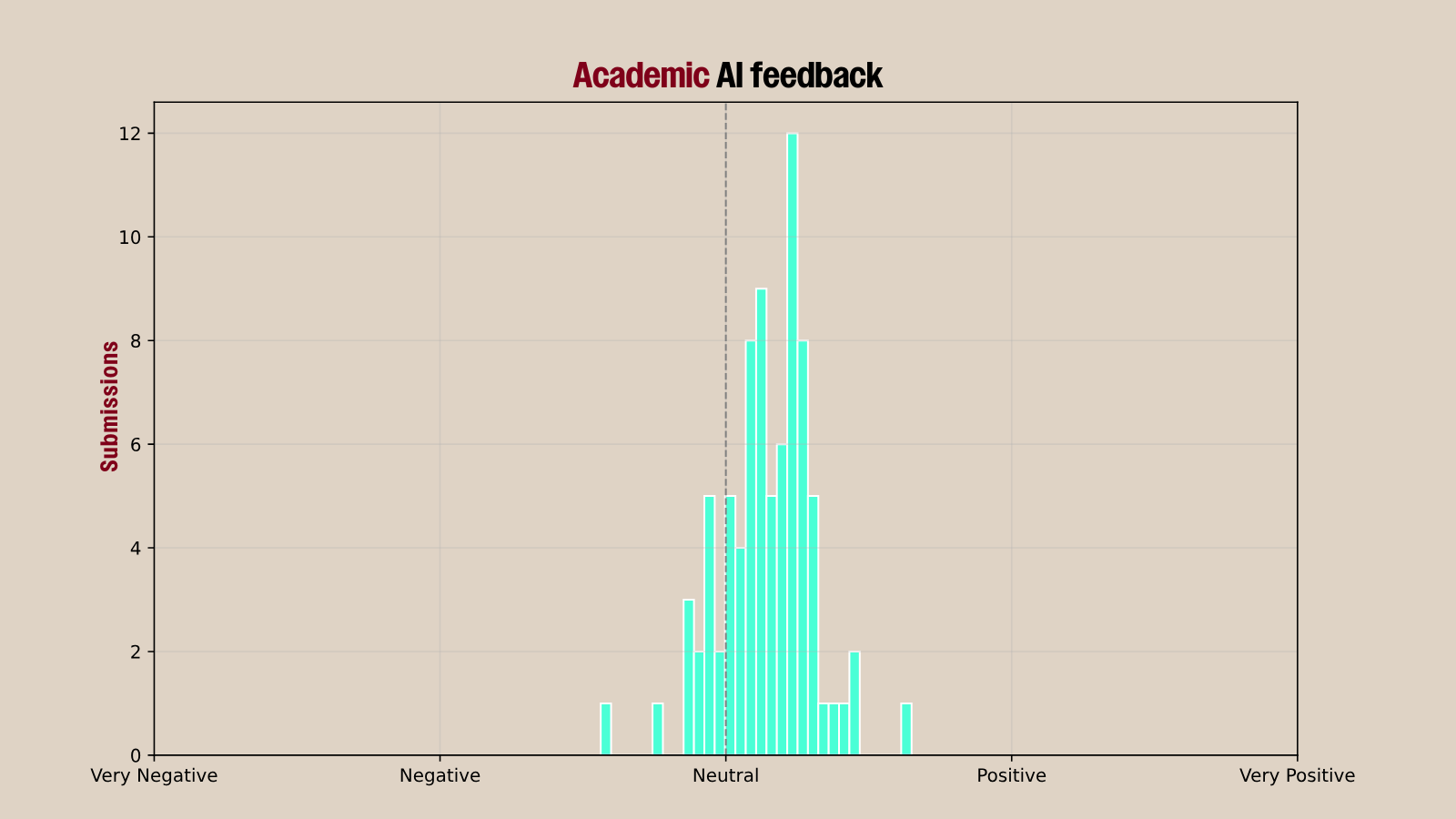

However, when we filtered for private sector respondents, industry groups, academics and nonprofits, their feedback on AI leaned more positive, though still not overwhelmingly so.

How companies are talking about artificial intelligence

While the algorithms gave us a top-level picture of the data, we dug into some of the views of the most important players in AI.

OpenAI

OpenAI highlighted the role of AI in American national security strategy, emphasizing the AI race between the United States and China. “The Trump Administration’s new AI Action Plan can ensure that American-led AI built on democratic principles continues to prevail over CCP-built autocratic, authoritarian AI,” the company wrote.

It argued that DeepSeek is as significant a threat to the American economy as Huawei, the Chinese telecom giant that the first Trump administration banned from selling its products in the United States. OpenAI argued that DeepSeek also could be manipulated by the Chinese government to cause harm. To combat Chinese dominance in the AI race, OpenAI said the United States needs to reduce regulatory burdens, implement greater export controls and completely revamp its copyright policies to broaden its definition of fair use.

Many writers, publishers, artists and other creators are currently suing OpenAI for using their work to train models without permission or compensation. The company’s position that copyright law could hamper the development of AI models, and harm American innovation, echoes advice former Google CEO Eric Schmidt gave to a group of Stanford students last year, telling them that entrepreneurs should steal others’ data and then “hire a whole bunch of lawyers to go clean the mess up.”

Recording Industry Association of America, et al.

Some of the biggest trade groups in the creative world, such as the Recording Industry Association of America and SAG-AFTRA, said in a joint comment that they want current copyright laws to stay in place. If AI companies want to train models on creative work, the groups argued, they should have to license that content, just as OpenAI did with the Associated Press, Hearst and Condé Nast. While the details of those deals haven’t been made public, they show that it’s possible for AI companies and content creators to strike agreements over how creative work gets used.

Electronic Frontier Foundation

But not all groups are convinced licensing deals are the solution. “Mandatory licensing through copyright is unlikely to provide any meaningful economic support for vulnerable artists and creators,” the Electronic Frontier Foundation wrote in its submission. It argued that licensing deals help big publishers but do very little for independent artists, pointing to how the music industry often fails to share payments from streaming deals with artists. EFF also highlighted the potential for AI to reproduce biases or lead to other human rights violations. It warned that the government's rapid adoption of AI, as evidenced by the Department of Government Efficiency's (DOGE) plan to replace federal workers with AI, could have unintended consequences.

EFF proposed implementing a robust notice-and-comment practice to give the public notice when agencies decide to use AI. “A public and transparent notice-and-comment process will help reduce harm to the public and government waste by working to weed out bogus products and identify applications where certain types of tools, such as AI, are inappropriate,” it wrote.

Future of Life Institute

The Future of Life Institute, an effective altruism nonprofit, argued that the federal government should issue a moratorium on developing AI systems that could escape human control. It also advocated for developing AI “free from ideological agendas” and banning models that can engage in superhuman persuasion and manipulation. The risks of this type of manipulation have been made clear in recent weeks, as publications like Rolling Stone have reported on sycophantic AI convincing individuals that they may be messianic. Others have reported on AI bots convincing people to self-harm.

Anthropic

“We expect that the economic and national security implications of this technology will be tremendous,” wrote the AI company Anthropic, emphasizing the existential risks and possibilities of artificial intelligence. “[I]t is imperative that this technology be treated as a critical national asset.”

Like OpenAI, Anthropic argued for greater export controls on critical technologies. However, the company also said that the federal government needs to invest in more evaluation and testing of powerful AI models, noting that its latest model “demonstrates concerning improvements in its capacity to support aspects of biological weapons development.” Anthropic also advocated for enhancing security at American AI labs to make it harder for bad actors to hack or steal frontier model data.

Mistral AI

The French frontier AI lab also submitted a response to the request for feedback, but diverged from its U.S. counterparts on several recommendations. Most crucially, Mistral AI warned that “export controls can have unintended consequences.” It emphasized the need to balance national security with support for innovation and trade. “Imposing strong tariffs on AI models could hinder the development of new models from abroad that the U.S. can capitalize on to create new jobs, new development and economic growth at home,” the lab said.

It also advocated prioritizing open-source development of frontier models. Unlike the American labs, it emphasized international cooperation.