The Internet of Bodies is here. Are we ready for it?

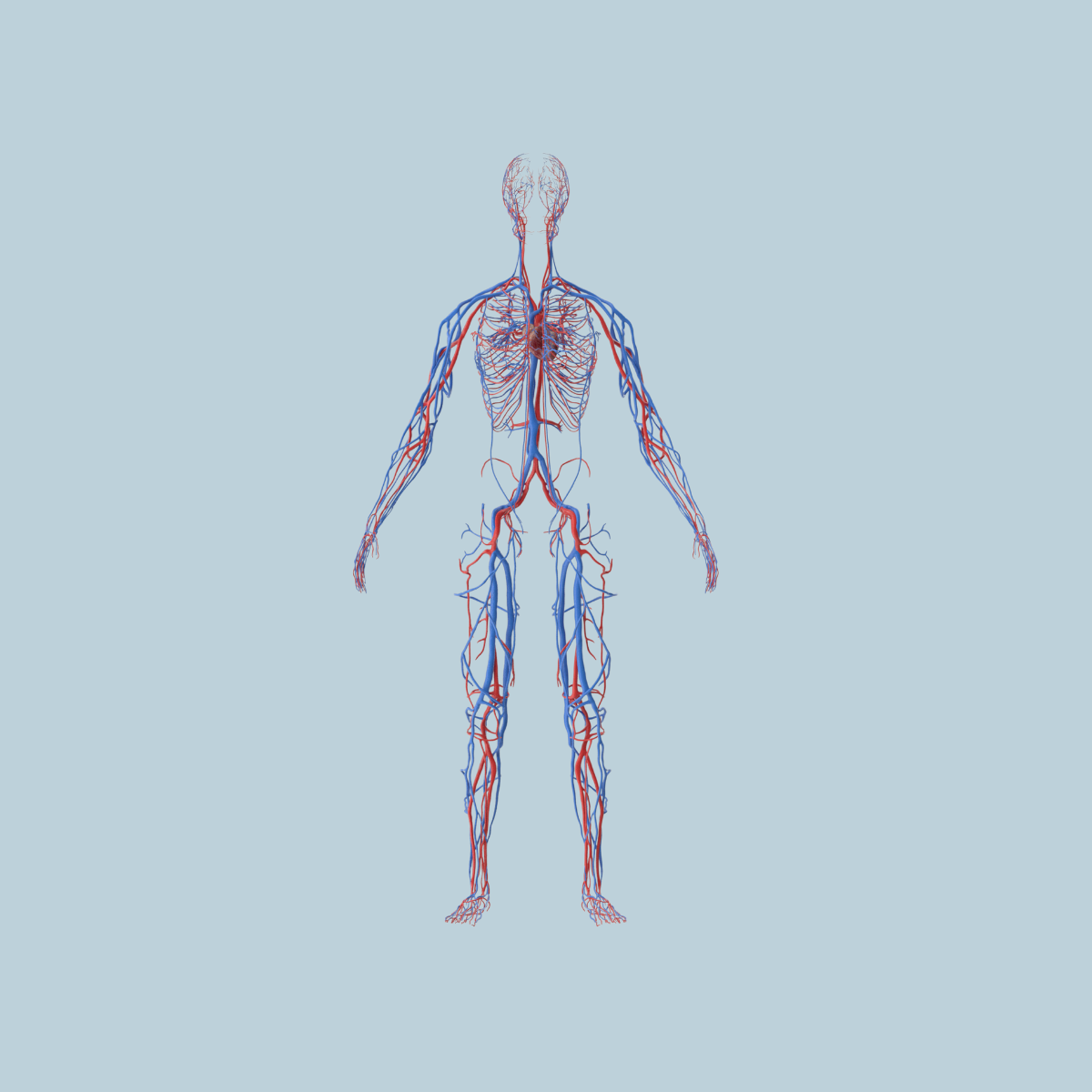

As we implant, ingest and wear smart devices, the line between human and machine blurs—along with our control over our own bodies.

COMMENTARY By Martina Le Gall Maláková

As uses for artificial intelligence proliferate ever more rapidly in our everyday lives, an ethical question is becoming pressing: what are the risks and benefits of merging with our technologies?

Like the Internet of Things, which connects objects to the internet, the Internet of Bodies (IoB) connects you to the internet. The cyborg is now more reality than science fiction. We are wearing, ingesting and implanting sensors all over our bodies, from vital health management devices (like pacemakers and insulin pumps) to smart watches. And AI plays a central role in the IoB, mediating the way these technologies interact with our bodies.

Once these devices are connected, their data can be exchanged, and our bodies can be remotely monitored, controlled and analyzed. The IoB may improve human life and health, but it also carries risks.

There are, of course, the classic technological risks: cybersecurity breaches and the misuse of our personal data. But there is a second risk that is at least as pressing. We risk losing ourselves to addiction to our devices, and as we merge with them, what happens when a person is controlled via an AI-powered robot? The result could be a loss of control over both one’s body and one’s self. We must take into account how emerging technologies can be used, and especially misused, and what that means for society and humanity as a whole.

Frequently, when something new is created, we try to proceed according to established ethical values and principles, adhering to familiar basic rules. But history shows that, in many cases, this eventually stops being enough. As Internet of Bodies technology continues to grow, regulatory and legal issues will have to be resolved and policies built around the proper use of the technology.

What happens if IoB devices deviate from their intended purpose? Or when predatory workplaces start mandating their use to track workers? Whom do we hold accountable when an AI-controlled device goes awry and causes actual harm?

The European Union’s proposed Artificial Intelligence Liability Directive (AILD) is one attempt to answer that question, giving people recourse when an artificial intelligence system causes them damage. While the minutiae of the directive are still being debated, opposition to it is rooted in concern that it will stymie European innovation.

We can already predict that, as we have seen with other emerging technologies, these issues will not be resolved in a timely manner. At the same time, if we limit or slow down the use of these technologies, a gray economy will emerge in which people can buy unauthorized versions of these tools that are not bound by any rules, principles or controls. Restriction alone, then, is not a solution.

Together, we need to use every available international organization, platform and forum to discuss this topic and, above all, to quickly adopt measures, rules and legislation that mitigate the risks. This can only be achieved through round-table dialogue, with sufficient awareness of these emerging technologies and sufficient commitment to equality, ethics and human values, for the future survival of our humanity and our planet.

Martina Le Gall Maláková is an expert on Data Free Flow with Trust on behalf of BIAC at the OECD.